Can we trust AI when it comes to biology?

AI has rapidly demonstrated its versatility: writing code, solving math problems, and answering physics questions. A large language model (LLM) could probably write this article faster and better than I can (although it still can’t conduct peer review or design wet-lab experiments). AI can search, parse and synthesize more information in a moment than you or I could in a month, which is why it is being steadily integrated into workstreams across most industries. But not everywhere.

The pharmaceutical industry (and biosciences more broadly) should be an obvious target for AI. Biological science treats the human body as a system that can be understood, modelled, and influenced, much like physics - where AI has already been successful (for example in weather forecasting). But pharma is still skeptical. Why? And what can AI developers do to address those concerns?

Probing the problems

Data is the first hurdle. Pharmaceutical-grade models train on biological and clinical data that is often messy and incomplete (unlike the curated databases used to train successes such as AlphaFold). Published assays are biased towards positive results, metadata is sparse, and standards vary across labs. Datasets overrepresent privileged populations (often of European ancestry), missing important genetic variation and skewing model results. All of this makes model predictions brittle - they can seem to perform well on training data but fail when confronted with novel scenarios.

Second, modern AI architectures are often opaque. Models offer correlations and predictions without showing or explaining the biological reasoning behind them. Research teams are therefore asked to take AI results on faith, with no way to interrogate why the model reached its conclusion. This undermines further discovery, because a result that cannot be explained is hard to build a hypothesis on.

Lastly, LLMs - the backbone of modern agentic AI systems - hallucinate. They can invent references, fabricate assay numbers, or over-generalise from limited examples (see this paper). They even cite retracted work without warning. In the pharmaceutical industry, the decision to move forward with costly preclinical or clinical trials can depend on subtle signals or one or two key experiments. The risk that a signal could be hallucinated has a chilling effect on AI adoption - especially when that error might only be uncovered after years of expensive trials.

So how have AI developers for the pharmaceutical and biotech industry been tackling these challenges?

Seeing through hallucinations

Perhaps the clearest avenue for improvement is model accuracy. Hallucination is a byproduct of the structure of LLMs - as such, it cannot be removed from models entirely, but it can be mitigated.

Retrieval-augmented generation (RAG) is the most widespread technique for improving LLM accuracy. Before answering, the system runs a separate search of the internet (or the scientific literature) and passes the retrieved documents to the LLM to shape its answer, keeping the answer closer to what the sources actually say. This grounds claims in source documents, reduces fabrication, and lets a reader audit each claim against the linked source. RAG is now standard for LLMs that tackle biological questions.

A complementary way of achieving better results is to fine-tune an LLM on specialist training data. Fine-tuning helps an existing LLM handle domain-specific vocabulary, capture patterns in a dataset’s style or logic, and learn from examples of correct behaviour. This is the new frontier for improving the accuracy of scientific LLMs, and it is already showing progress (as with OwkinZero).
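To make the retrieval step concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how any production system (Owkin's included) is built: the three-document corpus, the word-overlap scorer, and the function names `retrieve` and `build_grounded_prompt` are all hypothetical, and the final call to an LLM is left out. Real systems typically use a search index or embedding model in place of the toy scorer.

```python
import re
from collections import Counter

# Hypothetical mini-corpus standing in for a literature index.
CORPUS = {
    "doc-1": "Microsatellite instability is a biomarker used in colorectal cancer.",
    "doc-2": "Protein structure prediction estimates three-dimensional folds from sequence.",
    "doc-3": "Organoids are in vitro models derived from patient tissue.",
}

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def retrieve(question, corpus, k=2):
    """Return up to k document ids ranked by shared-token count (toy scorer)."""
    question_tokens = Counter(tokenize(question))
    scored = []
    for doc_id, text in corpus.items():
        overlap = sum((question_tokens & Counter(tokenize(text))).values())
        if overlap > 0:
            scored.append((overlap, doc_id))
    # Highest overlap first; ties broken by id so the result is deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:k]]

def build_grounded_prompt(question, corpus, k=2):
    """Assemble a prompt that restricts the model to the retrieved sources."""
    ids = retrieve(question, corpus, k)
    sources = "\n".join(f"[{doc_id}] {corpus[doc_id]}" for doc_id in ids)
    prompt = (
        "Answer using only the sources below and cite their ids. "
        "If the sources do not contain the answer, say so.\n"
        f"Sources:\n{sources}\n"
        f"Question: {question}"
    )
    return prompt, ids

prompt, used_ids = build_grounded_prompt("What are organoids derived from?", CORPUS)
print(prompt)
```

Because the prompt carries the source ids, any claim in the answer can be traced back to a specific document, which is what makes the audit step possible.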

Another way to address the potential inaccuracy of AI answers is uncertainty quantification (UQ). UQ tells a user when a prediction falls outside the model’s competence envelope, and therefore when to trust the output and when to run an experiment instead. Recent work has pushed these methods into graph neural networks and molecular models with promising results (see our recent paper).
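One common way to estimate uncertainty is to train several models and measure how much they disagree. The sketch below assumes that approach; it is not necessarily the method used in the work mentioned above. The three toy "models", the `max_std` threshold, and the `triage` helper are hypothetical and chosen only to show the trust-or-test decision.

```python
from statistics import mean, pstdev

def ensemble_predict(models, x):
    """Return the mean prediction and the spread across ensemble members."""
    predictions = [model(x) for model in models]
    return mean(predictions), pstdev(predictions)

def triage(models, x, max_std=0.1):
    """Flag a prediction for experimental follow-up when the ensemble disagrees."""
    mu, sigma = ensemble_predict(models, x)
    action = "trust" if sigma <= max_std else "run experiment"
    return mu, sigma, action

# Hypothetical ensemble: three models that agree for small inputs
# and drift apart as the input moves away from that range.
MODELS = [lambda x: 1.00 * x, lambda x: 1.05 * x, lambda x: 0.95 * x]

for x in (1.0, 10.0):
    mu, sigma, action = triage(MODELS, x)
    print(f"x={x}: prediction={mu:.2f}, spread={sigma:.3f}, action={action}")
```

The threshold is a judgment call: set it too loose and bad predictions slip through, too tight and every prediction is sent back to the lab.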

Explaining the inexplicable

To solve the “black-box” nature of AI, models must be made explainable. In image analysis, AI models can be designed to highlight which areas of an image are driving their predictions (e.g. in MSIntuit CRC and RlapsRisk). This allows biologists to examine those areas and evaluate whether the model has identified a biological feature that could explain its result.
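One generic way to find the areas driving a prediction is occlusion: mask one patch of the image at a time and record how far the model's score drops. The sketch below assumes that technique purely for illustration; the source does not say which method MSIntuit CRC or RlapsRisk use. The `toy_score` function is a hypothetical stand-in for a trained model, and the image is a small grid of numbers.

```python
def occlusion_map(image, score_fn, patch=2, baseline=0.0):
    """Heatmap of score drops when each patch is replaced by a baseline value."""
    height, width = len(image), len(image[0])
    base_score = score_fn(image)
    heat = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            occluded = [row[:] for row in image]  # copy so the input is untouched
            for r in rows:
                for c in cols:
                    occluded[r][c] = baseline
            drop = base_score - score_fn(occluded)
            for r in rows:
                for c in cols:
                    heat[r][c] = drop  # large drop = region mattered to the score
    return heat

def toy_score(image):
    """Hypothetical model that only responds to the top-left 2x2 region."""
    return sum(image[r][c] for r in range(2) for c in range(2))

image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_map(image, toy_score)
for row in heat:
    print(row)
```

A pathologist would then look at the high-scoring regions and ask whether they contain a recognisable biological feature.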

LLMs can also be asked to explain themselves, i.e. to reason about why they provided an answer. At the moment, most major models cannot explain the biological reasoning behind their answers. But work is now underway to train models on high-quality curated biological datasets (as with OwkinZero), which helps them achieve biologically grounded reasoning and should improve the quality of their explanations in this domain.

The data puzzle is the hardest to solve. Most models need large amounts of training data, and the datasets available are imperfect. Recognizing this, AI companies are increasingly producing their own large datasets on which to train their models. Recursion does this with 2D in vitro cell assays, and Owkin does this with in vitro organoids and patient multiomics data (through MOSAIC). It is still too early to judge whether this approach will pay dividends that outweigh the upfront costs.

All in all, the AI world is trying hard to prove itself reliable to the pharmaceutical industry. But, after an initial surge in investment, pharma is still waiting for a step-change in the power of AI before it fully trusts the technology. We are not there yet, but there are signs that this is on the horizon.

An uneven playing field

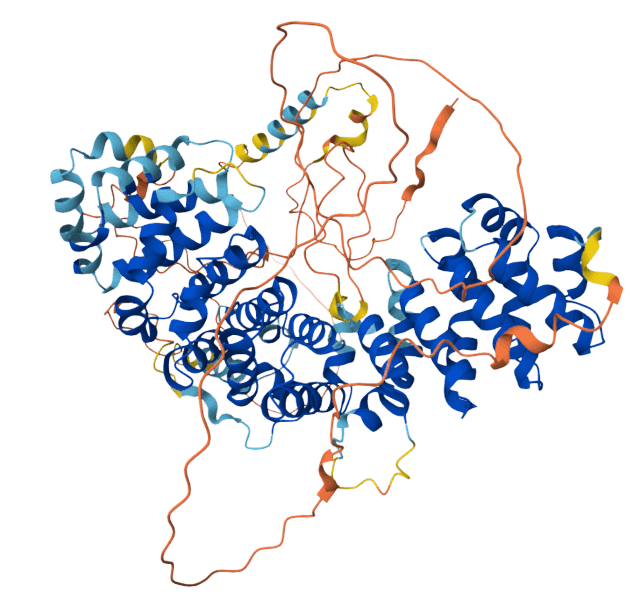

AI is not entirely unsuccessful at analysing biology. AI diagnostics - where AI analyses digital pathology images to predict genotype, biomarkers and clinical prognosis - have been shown to work at an expert level (e.g. MSIntuit CRC). At a molecular level, DeepMind’s AlphaFold is an AI breakthrough that has transformed a decades-old bottleneck. In many cases it predicts three-dimensional protein structures at near-experimental accuracy, and it reports confidence scores that correlate with empirical error. The cascade effect of DeepMind’s success has been immediate: researchers use predicted structures for target selection, mutational scans, and as priors for molecular docking. This is the promise of good AI. In a similar vein, AI-designed drugs already exist on the market - but only where AI was used to find their optimal structure for target binding. Here, AI can add value.

But de novo drug discovery presents a more complex challenge. As of 2024, eight leading AI drug discovery companies had 31 drugs in human clinical trials: 17 in Phase I, five in Phase I/II, and nine in Phase II/III. These are modest numbers given the investment and hype. The problem is not prediction accuracy but biological complexity: designing a molecule that folds correctly is easier than designing one that navigates the body's regulatory systems, reaches its target and produces therapeutic benefit without toxicity.

In search of a feeling

Remember how you felt the first time you used ChatGPT? Wow, that’s actually amazingly good!

That moment hasn’t happened yet for pharma. But there are many companies trying to get there. At Owkin, we think that recent advances in agentic AI - coupled with research into true biological reasoning - will deliver that step-change to trusted AI.

We launch K Pro - our agentic AI co-pilot - in October to build towards that ‘wow’ moment for pharma with AI. Our view is that by training agents to specialize in different aspects of biology (with true reasoning capabilities - as with OwkinZero), we can start seeing reasoning at a level that provides real, surprising, delightful insight to pharma users.

And our vision is that, over time, this platform will evolve into a Biological Artificial SuperIntelligence (BASI). That would be a real step-change, both for our customers and for healthcare as a whole.