Data protection is a hard problem for agentic AI - how do we solve it?

Agentic AI systems don’t just answer search queries. AI agents plan, call tools, write and run code, and traverse sensitive data on behalf of a user. That is powerful autonomy, and it raises concerns around data protection and privacy. The risks are concrete:

- inadvertent data exfiltration

- over‑broad permissions

- unclear data lineage

- opaque model memory

In biomedicine, we handle patient‑level, multi‑modal biological data: omics, imaging and clinical records. For AI in the pharmaceutical industry, the risks around data protection are amplified by regulation and by the ethical duty to protect patient privacy and avoid bias.

For example, a user might prompt a drug discovery AI agent to investigate a particular target. The agent might then independently access molecular databases, review internal research documents, analyze competitor patents, and cross-reference manufacturing cost data to identify and characterize promising compounds. Does the agent have rights to the underlying data? Was this usage part of the original intent?

The problem is compounded by the fact that these AI systems often need to integrate data from multiple sources to be effective. An AI agent optimizing clinical trial design might require access to proprietary clinical trial data, patient recruitment strategies, internal efficacy data, cost models, and regulatory submission templates. Each additional data source further increases the need for strict IP controls.

So how can we design agentic AI that gives us confidence in its data protection? Current solutions to this problem fall into two broad categories. First, we can actively manage the data that AI agents have access to. Second, we can adapt the data itself.

Actively manage data access for AI agents

Ideally, agents should receive only the data and tools strictly necessary for a given task. But agents often require very granular data to function well. Besides, we may not know exactly what data would best answer the question: working that out is part of the agent’s job. Here are three broad-brush solutions to the problem, each helping to create an organizational and operational environment ready for agents.

Access control systems create multiple security perimeters around different classes of proprietary data (see this research). Agentic AI systems receive tiered access permissions, with the most sensitive IP requiring explicit authorization for each access attempt. This is an analogue of the data governance and data access systems that most companies already apply to their employees. One could even envisage a situation where certain agents have more rights than a specific employee, and would have to tailor their output to the recipient’s role.
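
To make the idea of tiered permissions concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the tier names, the `AgentPolicy` class and the `can_access` function are hypothetical and do not describe any particular product. Lower tiers are allowed up to the agent's ceiling, while the top tier needs an explicit grant that is consumed on use, so each access attempt requires fresh authorization.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical sensitivity tiers, lowest to highest."""
    PUBLIC = 0
    INTERNAL = 1
    RESTRICTED = 2  # most sensitive IP: explicit authorization per access


@dataclass
class AgentPolicy:
    agent_id: str
    max_tier: Tier
    # (dataset, access_id) pairs explicitly authorized by a data owner
    one_time_grants: set = field(default_factory=set)


def can_access(policy, dataset, dataset_tier, access_id):
    """Return True if this single access attempt is allowed."""
    if dataset_tier > policy.max_tier:
        return False  # above the agent's ceiling, never allowed
    if dataset_tier == Tier.RESTRICTED:
        key = (dataset, access_id)
        if key in policy.one_time_grants:
            policy.one_time_grants.remove(key)  # a grant covers one attempt only
            return True
        return False
    return True


policy = AgentPolicy("discovery-agent", Tier.RESTRICTED)
policy.one_time_grants.add(("lead_series", "req-001"))
first_try = can_access(policy, "lead_series", Tier.RESTRICTED, "req-001")
second_try = can_access(policy, "lead_series", Tier.RESTRICTED, "req-001")
```

In this sketch the second attempt is refused, because the grant was consumed by the first one.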

A digital ‘sandbox’ keeps an agentic AI within secure enclaves and trusted execution environments. This ensures that even if the AI is compromised, proprietary data remains encrypted and protected. However, the agentic system would only have access to data placed in the sandbox to start with. For example, IMDA’s privacy enhancing technology sandbox uses a trusted execution environment (TEE) to safely analyze pharmaceutical product movement data. In the EU, EUSAIR is doing a similar thing.

Granular permissioning puts a human in the loop whenever an agent tries to access a new dataset: a real-time alert notifies the program owner, who can grant or refuse permission (see this research). This fits with the zero-trust architecture that most cloud-based applications already use in some form, in which no agent component can call a resource, tool or another agent without a live and valid auth token.
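
The two ideas in this paragraph, human approval and short-lived tokens, can be sketched together. The example below is illustrative only: the function names, the token layout and the hard-coded secret are assumptions, and a production system would rely on a managed key service and a standard token format. An `approve` callback stands in for the program owner's decision, and every later call is checked against a signed, expiring token.

```python
import hashlib
import hmac
import time

SECRET = b"demo-only-secret"  # assumption: real systems use a managed key service


def issue_token(agent_id, resource, ttl_seconds, now=None):
    """Sign 'agent|resource|expiry'. IDs must not contain '|' in this sketch."""
    now = time.time() if now is None else now
    payload = f"{agent_id}|{resource}|{int(now + ttl_seconds)}"
    sig = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}|{sig}"


def token_allows(token, agent_id, resource, now=None):
    """Check signature, scope and expiry before any resource call."""
    now = time.time() if now is None else now
    try:
        tok_agent, tok_resource, expires, sig = token.split("|")
    except ValueError:
        return False  # malformed token
    payload = f"{tok_agent}|{tok_resource}|{expires}"
    expected = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig, expected):
        return False  # tampered or forged
    return tok_agent == agent_id and tok_resource == resource and now < int(expires)


def request_access(agent_id, resource, approve, ttl_seconds=300, now=None):
    """Ask the owner (the approve callback); issue a token only on approval."""
    if not approve(agent_id, resource):
        return None
    return issue_token(agent_id, resource, ttl_seconds, now)


# The owner approves read access to one dataset for five minutes.
token = request_access("trial-agent", "cost_models", lambda agent, res: True)
```

The token is scoped to one agent and one resource, so it cannot be reused to reach a different dataset, and it stops working once it expires.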

Adapt the data to the agents

If limiting access to data is an imperfect solution, it is also possible to change aspects of the data itself to mitigate risks around data protection.

With federated data access, data stays siloed and the model travels instead: model weights are sent to each site, trained on the local data, and returned. In this way, the algorithms learn “on location”, bringing insights back from the data without the data ever leaving its home. Agentic systems could then use the trained models without having to see the data itself. Owkin has been at the forefront of federated learning for many years now (find out more here).
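
As a toy illustration of learning “on location”, the sketch below runs a simple form of federated averaging on a two-parameter linear model. It is a teaching example under stated assumptions, not a description of Owkin's federated learning stack: the function names and the synthetic site data are invented, and real deployments add secure aggregation, authentication and much more. Only weights and sample counts cross the site boundary; the raw data points never leave `local_update`.

```python
def local_update(weights, data, lr=0.1, epochs=20):
    """Gradient descent on one site's private data; returns new weights and sample count."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def federated_average(updates):
    """Combine site weights, weighting each site by its number of samples."""
    total = sum(n for _, n in updates)
    w = sum(site_w[0] * n for site_w, n in updates) / total
    b = sum(site_w[1] * n for site_w, n in updates) / total
    return (w, b)


def train(sites, rounds=50):
    weights = (0.0, 0.0)
    for _ in range(rounds):
        # Each site trains locally; only (weights, count) pairs are shared.
        updates = [local_update(weights, data) for data in sites]
        weights = federated_average(updates)
    return weights


# Two hypothetical sites whose private data both follow y = 2x + 1.
site_a = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)]
site_b = [(1.5, 4.0), (2.0, 5.0)]
w, b = train([site_a, site_b])
```

With this synthetic data the shared model should end up close to a slope of 2 and an intercept of 1, even though neither site ever sees the other's records.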

Proprietary data masking provides AI systems with statistically representative data while obscuring specific molecular structures, formulations, or processes. For example, an AI agent might work with anonymized compound effectiveness data without seeing actual chemical structures. This would maintain analytical value while protecting IP.
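
A minimal sketch of this kind of masking, assuming records arrive as dictionaries with a `smiles` structure string and an `ic50_nm` potency value (both field names are hypothetical). Each structure is replaced by a keyed pseudonym, so the agent can still group and compare measurements per compound without seeing the chemistry. Note that this is pseudonymization rather than full anonymization: whoever holds the key can re-link pseudonyms to structures.

```python
import hashlib
import hmac


def mask_compounds(records, key, keep_fields=("ic50_nm",)):
    """Replace each structure with a keyed pseudonym; keep only whitelisted fields."""
    masked = []
    for rec in records:
        digest = hmac.new(key, rec["smiles"].encode(), hashlib.sha256).hexdigest()
        out = {"compound": f"cmpd_{digest[:12]}"}  # stable ID, reveals no structure
        for name in keep_fields:
            out[name] = rec[name]
        masked.append(out)
    return masked


records = [
    {"smiles": "CCO", "ic50_nm": 120.0, "project": "alpha"},
    {"smiles": "CCO", "ic50_nm": 95.0, "project": "alpha"},
    {"smiles": "c1ccccc1", "ic50_nm": 40.0, "project": "beta"},
]
masked = mask_compounds(records, key=b"demo-only-key")
```

The same structure always maps to the same pseudonym under a given key, so repeated measurements of one compound remain linkable in the masked view.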

Some data can be watermarked, embedding invisible markers in proprietary datasets that allow companies to trace if their data appears in unauthorized locations. In this way, they can monitor how data flows through their systems and detect potential leaks.
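
One simple way to picture such a watermark is a keyed least-significant-bit scheme on integer measurements, sketched below. This is a deliberately simplified illustration, not a robust or production scheme, and all names in it are hypothetical. A secret key decides which rows carry a mark and which bit they carry; only the key holder can later check a suspect copy for the pattern.

```python
import hashlib
import hmac


def _digest(key, row_id):
    return hmac.new(key, row_id.encode(), hashlib.sha256).digest()


def embed(rows, key, fraction=4):
    """rows maps row_id -> integer measurement. Returns a watermarked copy."""
    out = {}
    for row_id, value in rows.items():
        d = _digest(key, row_id)
        if d[0] % fraction == 0:        # keyed choice of which rows carry a mark
            bit = d[1] & 1              # keyed choice of the mark bit
            value = (value & ~1) | bit  # overwrite the least significant bit
        out[row_id] = value
    return out


def match_rate(rows, key, fraction=4):
    """Share of keyed rows whose lowest bit matches the expected mark."""
    marked = hits = 0
    for row_id, value in rows.items():
        d = _digest(key, row_id)
        if d[0] % fraction == 0:
            marked += 1
            hits += (value & 1) == (d[1] & 1)
    return hits / marked if marked else 0.0


original = {f"row{i}": 1000 + 7 * i for i in range(400)}
watermarked = embed(original, key=b"owner-key")
rate_with_key = match_rate(watermarked, key=b"owner-key")
```

Each value changes by at most one unit, and a match rate near 1.0 under the owner's key suggests the copy came from the watermarked dataset, whereas unrelated data would be expected to match only about half the time.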

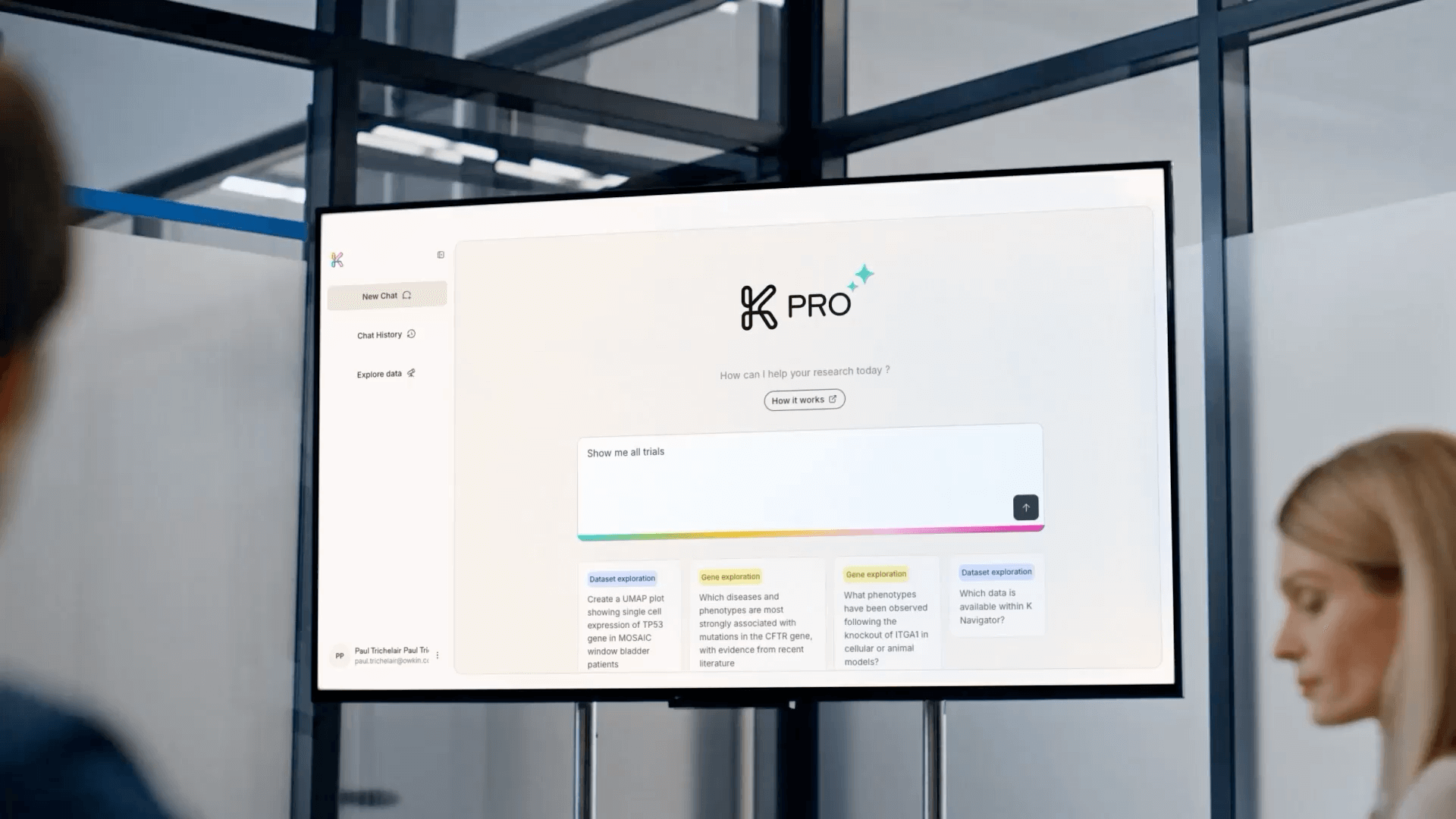

All these methods are interesting in theory - but how does a fully integrated agentic AI system for biomedical research do it in practice? Let’s look at Owkin’s K Pro, our AI agent platform due to be launched this October, as a useful AI agent case study.

Protecting your data with Owkin’s K Pro

A typical K Pro user might work in the pharmaceutical or biotech industry. This user may want to analyse their own data with K Pro’s. One way to do that is to upload their data into K Pro, a “bring-your-own-data” approach. Our user documents their data, ensures K Pro can read the data’s modalities, and facilitates transfer of the data from the user institution’s cloud storage to K Pro’s. The data will be available in minutes, or longer depending on its complexity and AI readiness. So how do we maintain security throughout this process?

Our system is designed for “bring-your-own-data” without the breach. We focus on strict tenant segregation, predictable cost controls, and repeatable data‑in pipelines, backed by a comprehensive risk register and a scoped technical implementation.

K Pro’s permission system is designed so agents inherit user and organizational entitlements. These can be restricted per dataset, tool and action. The goal is auditable, revocable, least‑privilege execution (including for external collaborators) to avoid “agent sprawl”.
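
The general pattern of inherited, revocable, auditable entitlements can be sketched as follows. This is a hypothetical illustration and not K Pro's actual implementation: the `EntitlementStore` class, its methods and the identifiers are all invented for the example. An agent may act only if both the user it works for and that user's organization hold the grant for the specific dataset and action, and every decision is written to an audit log.

```python
from datetime import datetime, timezone


class EntitlementStore:
    """Hypothetical in-memory store of (principal, dataset, action) grants."""

    def __init__(self):
        self._grants = set()
        self.audit_log = []

    def grant(self, principal, dataset, action):
        self._grants.add((principal, dataset, action))

    def revoke(self, principal, dataset, action):
        self._grants.discard((principal, dataset, action))

    def agent_may(self, user, org, dataset, action):
        """The agent inherits rights: user AND organization must both hold the grant."""
        allowed = all((p, dataset, action) in self._grants for p in (user, org))
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user, "org": org,
            "dataset": dataset, "action": action, "allowed": allowed,
        })
        return allowed


store = EntitlementStore()
store.grant("org:acme", "rnaseq_cohort", "read")
store.grant("user:ana", "rnaseq_cohort", "read")
can_read = store.agent_may("user:ana", "org:acme", "rnaseq_cohort", "read")
can_export = store.agent_may("user:ana", "org:acme", "rnaseq_cohort", "export")
```

Because the check runs at call time rather than when the agent is created, revoking a grant takes effect on the agent's very next action.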

We lean on cloud‑native data planes for scale and observability so agentic AI activity can be easily tracked. In the future, K Pro will allow users to audit their data to see how it has been accessed and used.

When integrating LLMs or third‑party services, we choose hosting options that guarantee privacy for inputs and outputs and we run formal risk assessments at every major change.

We can use federated data access when needed, for example when building federated external control arms (see our recent Nature paper). Where data cannot move, we bring computation to the data using federated learning and related patterns. This enables our agents to aggregate insight without centralising patient data.

We comply with the highest levels of external audit. We operate under ISO 27001 for information security, with recurring internal and external audits. ISO 27001 requires documented policies, frequent risk assessments, ongoing training, leadership involvement, and periodic review to keep security measures effective against evolving threats. Operationally, day in, day out, we run automated cybersecurity monitoring tools, along with threat and vulnerability detection and remediation. This is the backbone of our continuous data protection strategy.

So what now for agentic AI?

Data protection and governance remain the defining constraint for agentic AI in healthcare. The systems we trust with biology must be secure by default, private by construction and transparent in operation.

This is the first major hurdle for agentic AI providers to overcome in order to make agentic AI a standard tool within the pharmaceutical industry. First the industry needs to trust its data will be safe in the hands of the new agents. Then agentic AI’s true utility will start to show itself.