Harmonization

To create the very large datasets used to train AI models, researchers often have to combine lots of different data collected by different healthcare or research organisations and sometimes even by different countries.

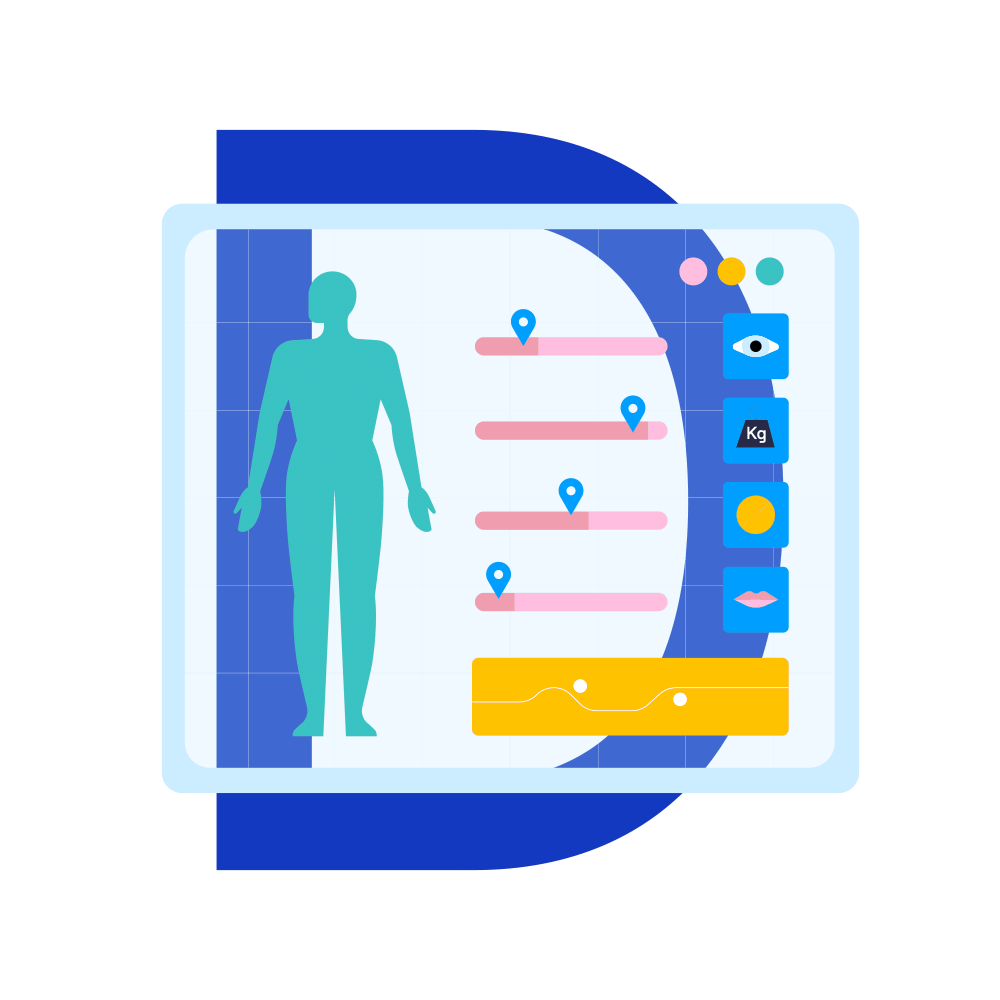

This process of bringing different data together can be difficult because different organisations and countries do not always record the same piece of information in the same way. For example, in the UK a person's weight would normally be recorded in their health record in kilograms (e.g., 60 kg), but in the US it would be recorded in pounds (e.g., 132 lbs). These differences might seem small to a human, but they can cause big problems in machine learning research. If both sets of weights are simply listed as numbers in a single spreadsheet column, a computer has no way of knowing that they use different units and will not convert them automatically. This can lead to mistakes in research findings.

Data harmonization is the process of bringing together data from different sources and making sure all the pieces of information are recorded in the same way so they can be harnessed for use in research. For example, making sure all the weights are changed from kilograms to pounds. This process makes the different data fit together better and so reduces the chances of mistakes or inaccurate outcomes.
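The weight example can be made concrete with a small conversion helper. This is a minimal sketch, not a production tool: the function name `convert_weight` and the accepted unit spellings are illustrative assumptions. The only fixed fact it relies on is the international definition 1 lb = 0.45359237 kg. The target unit is a choice: the sketch defaults to kilograms but can convert in either direction.

```python
KG_PER_LB = 0.45359237  # exact, by the international definition of the pound

# Hypothetical spellings a source might use for each unit.
_UNIT_ALIASES = {
    "kg": "kg", "kgs": "kg", "kilogram": "kg", "kilograms": "kg",
    "lb": "lb", "lbs": "lb", "pound": "lb", "pounds": "lb",
}


def convert_weight(value: float, from_unit: str, to_unit: str = "kg") -> float:
    """Convert a weight between kilograms and pounds, via kilograms."""
    src = _UNIT_ALIASES.get(from_unit.strip().lower())
    dst = _UNIT_ALIASES.get(to_unit.strip().lower())
    if src is None or dst is None:
        # Unknown units are rejected rather than guessed.
        raise ValueError(f"Unrecognised unit: {from_unit!r} or {to_unit!r}")
    kilograms = value if src == "kg" else value * KG_PER_LB
    return kilograms if dst == "kg" else kilograms / KG_PER_LB


print(round(convert_weight(132, "lbs"), 1))       # → 59.9
print(round(convert_weight(60, "kg", "lbs"), 1))  # → 132.3
```

Rejecting unknown units, instead of passing the number through unchanged, is deliberate: a silent pass-through would reproduce exactly the mixed-unit column the conversion is meant to remove.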

Here’s how it would work for a breast cancer research study combining data from two different hospitals:

- The Principal Investigators (PIs) define the inclusion criteria (who will be included in the study). For example, triple-negative breast cancer should not simply be pooled with other breast cancer types, because the underlying biology is likely to be different.

- Researchers determine the appropriate tradeoff between very homogeneous datasets (easier to train models on) and very diverse datasets (requiring more harmonization but usually leading to more generalizable results) to answer the scientific question.

- Researchers compare the datasets from the two hospitals to see whether either is missing data, and search their own internal records to fill any necessary gaps.

- Before training an AI algorithm on the datasets, researchers ensure that variables are coded in the same way. For example, if Hospital A records age as ‘number of years’ and Hospital B records it as a range (e.g. ‘under 18’), researchers convert both to a common coding. Because an exact age cannot be recovered from a range, this usually means grouping Hospital A’s exact ages into Hospital B’s ranges, so that a computer recognises them as the same kind of data and can be trained on it.

- Data quality checks are performed before starting machine learning performance tests.

- Performance tests are run before launching full-scale training for the research study.
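The breast cancer workflow above can be sketched on a handful of toy records. This is a minimal illustration, not Owkin's pipeline: the hospital records, the field names, the `HR+/HER2-` inclusion rule and the age bands are all hypothetical. Because an exact age cannot be recovered from a range such as ‘under 18’, the sketch harmonises age by grouping Hospital A's exact ages into Hospital B's bands.

```python
# Hypothetical age bands assumed to be used by Hospital B.
AGE_BANDS = [(0, 17, "under 18"), (18, 39, "18-39"), (40, 59, "40-59"), (60, 150, "60+")]
VALID_BANDS = {label for _, _, label in AGE_BANDS}

# Hypothetical records: Hospital A stores exact ages, Hospital B stores bands.
hospital_a = [
    {"patient_id": "A1", "age": 52, "subtype": "HR+/HER2-", "tumour_size_mm": 21},
    {"patient_id": "A2", "age": 38, "subtype": "triple-negative", "tumour_size_mm": 30},
    {"patient_id": "A3", "age": 67, "subtype": "HR+/HER2-", "tumour_size_mm": 14},
]
hospital_b = [
    {"patient_id": "B1", "age": "40-59", "subtype": "HR+/HER2-"},
    {"patient_id": "B2", "age": "60+", "subtype": "HR+/HER2-"},
]


def meets_inclusion(record: dict) -> bool:
    """Inclusion criteria (hypothetical): a single subtype, so biologically
    different cancers such as triple-negative are not pooled."""
    return record["subtype"] == "HR+/HER2-"


def compare_fields(a: list, b: list) -> dict:
    """Report fields that one hospital records and the other does not."""
    fields_a = set().union(*(r.keys() for r in a))
    fields_b = set().union(*(r.keys() for r in b))
    return {"only_in_a": fields_a - fields_b, "only_in_b": fields_b - fields_a}


def age_to_band(age_years: int) -> str:
    """Map an exact age onto Hospital B's bands."""
    for low, high, label in AGE_BANDS:
        if low <= age_years <= high:
            return label
    raise ValueError(f"Age out of range: {age_years}")


def harmonise_age(record: dict) -> dict:
    """Return a copy of the record with age coded as a band label."""
    if isinstance(record["age"], int):
        return {**record, "age": age_to_band(record["age"])}
    return dict(record)


def quality_check(records: list) -> list:
    """Return IDs of records whose age is still not a recognised band."""
    return [r["patient_id"] for r in records if r["age"] not in VALID_BANDS]


pooled = [harmonise_age(r) for r in hospital_a + hospital_b if meets_inclusion(r)]
print(compare_fields(hospital_a, hospital_b))  # tumour_size_mm is only in Hospital A
print([r["age"] for r in pooled])  # → ['40-59', '60+', '40-59', '60+']
print(quality_check(pooled))       # → []
```

The field comparison flags `tumour_size_mm` as a gap that Hospital B would need to fill. The quality check runs after recoding, so any record that slipped through with an unrecognised age is caught before model testing starts.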

To create the large datasets used to train AI models, it’s often necessary to combine, link, or integrate two or more datasets originally collected or produced at different times, by different organisations, or in different countries. Combining datasets in this way can help mitigate bias, increase the study sample size, and increase statistical power. However, it can be challenging, as individual datasets may contain variables that measure the same construct differently.

For example, one dataset might record ethnicity only as British, while another records it as Welsh, Scottish, or Northern Irish. Similarly, weight might be recorded in kilograms in one dataset and in pounds in another. Such inconsistencies make it far harder to compare datasets of different origins and to create a single source of truth. If not corrected, they can introduce errors into model training, including overfitting, that compromise the research results.
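For the ethnicity example, one common approach is to map the finer-grained labels onto the coarsest category that every source can supply. The mapping below is a hypothetical harmonisation rule written for illustration, not a recommended ethnicity coding.

```python
# Hypothetical rule: map finer-grained labels onto the coarsest
# category that every source can supply.
ETHNICITY_MAP = {
    "british": "British",
    "welsh": "British",
    "scottish": "British",
    "northern irish": "British",
}


def harmonise_ethnicity(label: str) -> str:
    """Return the shared label for a source label, or fail loudly."""
    key = label.strip().lower()
    if key not in ETHNICITY_MAP:
        # Unmapped labels are surfaced rather than silently dropped.
        raise ValueError(f"No harmonisation rule for ethnicity label {label!r}")
    return ETHNICITY_MAP[key]


dataset_1 = ["British", "British"]
dataset_2 = ["Welsh", "Scottish", "Northern Irish"]
print([harmonise_ethnicity(x) for x in dataset_1 + dataset_2])
```

Mapping only works in one direction: the detail in the second dataset is lost once its labels are collapsed, and "British" cannot be split back into Welsh, Scottish, or Northern Irish without extra information.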

Data harmonisation helps researchers overcome these kinds of issues, which undermine the compatibility and comparability of different datasets, by processing and transforming the datasets so that they all fit within the same data schema. This might, for example, involve ensuring that all categorical and binary variables use the same label for the same concept (such as ethnicity) and that all continuous variables are measured on the same scale.
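A shared data schema can be written down explicitly and used to check each record. This is a sketch under stated assumptions: the `Variable` class, the three example variables and their allowed labels are hypothetical, and continuous values are stored as `(number, unit)` pairs purely so that the unit can be checked.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variable:
    """One variable in a shared (hypothetical) target schema."""
    name: str
    kind: str                         # "categorical", "binary" or "continuous"
    allowed: frozenset = frozenset()  # permitted labels for categorical/binary
    unit: str = ""                    # required unit for continuous variables


SCHEMA = [
    Variable("ethnicity", "categorical", frozenset({"British", "Other", "Unknown"})),
    Variable("smoker", "binary", frozenset({"yes", "no"})),
    Variable("weight", "continuous", unit="kg"),
]


def check_against_schema(record: dict, schema=SCHEMA) -> list:
    """Return a list of problems; an empty list means the record conforms."""
    problems = []
    for var in schema:
        if var.name not in record:
            problems.append(f"{var.name}: missing")
            continue
        value = record[var.name]
        if var.kind in ("categorical", "binary"):
            if value not in var.allowed:
                problems.append(f"{var.name}: unexpected label {value!r}")
        elif var.kind == "continuous":
            # Continuous values are (number, unit) pairs in this sketch.
            if not (isinstance(value, tuple) and len(value) == 2):
                problems.append(f"{var.name}: expected a (value, unit) pair")
            elif value[1] != var.unit:
                problems.append(f"{var.name}: expected unit {var.unit!r}, got {value[1]!r}")
    return problems


print(check_against_schema({"ethnicity": "British", "smoker": "no", "weight": (60.0, "kg")}))
print(check_against_schema({"ethnicity": "Welsh", "smoker": "N", "weight": (132.0, "lbs")}))
```

The first record conforms and yields an empty list. The second fails on all three variables, which shows what still needs transforming before that source fits the schema.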

The process of data harmonization can be conducted prospectively or retrospectively. Prospective data harmonisation is the less common but simpler of the two options and involves ensuring all data are collected in the same format from the outset e.g., by designing electronic health record (EHR) systems that insist weight is always recorded in kilograms. As most hospitals collect data for use by humans and not computers, retrospective data harmonisation is far more common; however, it’s more technically complex since it involves the processing of already collected data.
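Prospective harmonisation moves the rule to the point of data entry. The sketch below accepts a free-text weight entry only if it is stated in kilograms. The function name and the accepted format are assumptions for illustration; a real EHR would more likely use a numeric field with a fixed unit than parse text.

```python
import re

# Accept entries like "60 kg", "72.5kg" or "60 KG"; anything else is rejected.
_KG_PATTERN = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*kg\s*$", re.IGNORECASE)


def parse_weight_entry(raw: str) -> float:
    """Return the weight in kilograms, refusing any other unit at entry time."""
    match = _KG_PATTERN.match(raw)
    if match is None:
        raise ValueError(f"Weight must be entered in kilograms, e.g. '60 kg' (got {raw!r})")
    return float(match.group(1))


print(parse_weight_entry("60 kg"))  # → 60.0
```

Because non-kilogram entries are refused when they are typed, no later conversion step is needed for this variable.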

Retrospective data harmonisation involves a number of steps, including defining an agreed core set of variables, assessing the different datasets for comparability, modifying the data to make them more similar (e.g., manually converting weights from lbs to kg), and validating the outputs. This is a very resource-intensive process that may have to be repeated multiple times if the final integrated dataset is to be used for multiple purposes (e.g., disease surveillance and the monitoring of long-term clinical outcomes).
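The four retrospective steps can be sketched as a small rule-driven pipeline. This is a minimal illustration under assumptions: the source names (`site_uk`, `site_us`, `site_registry`), their field names and the two core variables are hypothetical.

```python
KG_PER_LB = 0.45359237  # exact definition of the international pound

# Step 1: the agreed core set of variables (hypothetical).
CORE_VARIABLES = {"age_years", "weight_kg"}

# Per-source rules (hypothetical fields): source field -> (core variable, transform).
RULES = {
    "site_uk": {
        "age": ("age_years", lambda v: v),
        "weight_kg": ("weight_kg", lambda v: v),
    },
    "site_us": {
        "age": ("age_years", lambda v: v),
        "weight_lb": ("weight_kg", lambda v: v * KG_PER_LB),
    },
    "site_registry": {
        "age": ("age_years", lambda v: v),
    },
}


def assess_comparability(sources):
    """Step 2: list the core variables each source cannot supply."""
    return {
        source: CORE_VARIABLES - {core for core, _ in RULES[source].values()}
        for source in sources
    }


def harmonise(source, record):
    """Step 3: rename and transform a source record into core variables."""
    out = {}
    for field, (core, transform) in RULES[source].items():
        if field in record:
            out[core] = transform(record[field])
    return out


def validate(record):
    """Step 4: check that every core variable is present and numeric."""
    return CORE_VARIABLES.issubset(record) and all(
        isinstance(record[name], (int, float)) for name in CORE_VARIABLES
    )


print(assess_comparability(["site_uk", "site_us", "site_registry"]))
us_record = harmonise("site_us", {"age": 45, "weight_lb": 132})
print(us_record, validate(us_record))
```

Keeping the rules as data, separate from the code that applies them, means the pipeline can be re-run with a different core set when the integrated dataset is repurposed. The comparability report shows that `site_registry` cannot supply `weight_kg`.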

Traditionally, data harmonisation was conducted manually by data scientists. Now, the process often also involves computational data harmonisation tools. This computationally enhanced process may be more efficient, but all data harmonisation processes must be well documented, otherwise meaning and value can be lost. Furthermore, it is not always possible to document processes that happen inside a computational ‘black box’ (for example, when a machine learning algorithm is used for data harmonisation).
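One lightweight way to keep harmonisation documented is to log every transformation as it is applied. The `HarmonisationLog` class below is a hypothetical sketch, not a specific tool. Note its limit: it records *that* a step ran and the description its author wrote, so a learned ‘black box’ transformation could be logged but not explained.

```python
import json
from datetime import datetime, timezone


class HarmonisationLog:
    """Record each transformation so a harmonised dataset can be audited."""

    def __init__(self):
        self.entries = []

    def apply(self, dataset, variable, description, transform):
        """Apply `transform` to `variable` in every record (in place) and log it."""
        changed = 0
        for record in dataset:
            if variable in record:
                new_value = transform(record[variable])
                if new_value != record[variable]:
                    changed += 1
                record[variable] = new_value
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "variable": variable,
            "description": description,
            "records_changed": changed,
        })
        return dataset

    def to_json(self):
        """Export the log so it can be stored alongside the dataset."""
        return json.dumps(self.entries, indent=2)


log = HarmonisationLog()
data = [{"weight": 132}, {"weight": 150}]
log.apply(data, "weight", "Converted pounds to kilograms (x 0.45359237), 1 d.p.",
          lambda lb: round(lb * 0.45359237, 1))
print(data)  # → [{'weight': 59.9}, {'weight': 68.0}]
print(log.to_json())
```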

An Owkin example

Many studies in the field of healthcare AI are monocentric (collecting data from a single center, with all patients, samples and measurements gathered in the same place) in order to avoid harmonization issues caused by heterogeneity. However, studies conducted in a single location often do not generalize to other contexts and are more susceptible to biases that limit the accuracy or validity of the results.

Owkin works closely with partner institutions, using multiple methods to generate and/or curate ‘AI-ready’ datasets and so reduce harmonization challenges from the outset. Once a project has kicked off, Owkin data scientists work hand in hand with PIs at the centers involved to ensure data quality before starting any machine learning performance tests. We have found that this process should be iterative and involve different experts in order to produce robust and reliable results from data drawn from multiple sources.

Further reading

- Adhikari, Kamala et al. 2021. ‘Data Harmonization and Data Pooling from Cohort Studies: A Practical Approach for Data Management’. International Journal of Population Data Science 6(1): 1680.

- Nan, Yang et al. 2022. ‘Data Harmonization for Information Fusion in Digital Healthcare: A State-of-the-Art Systematic Review, Meta-Analysis and Future Research Directions’. Information Fusion 82: 99–122.

- Schmidt, Bey-Marrié, Christopher J. Colvin, Ameer Hohlfeld, and Natalie Leon. 2020. ‘Definitions, Components and Processes of Data Harmonization in Healthcare: A Scoping Review’. BMC Medical Informatics and Decision Making 20(1): 222.