Overfitting

Overfitting is a concept that is closely related to generalisability. An algorithm is described as generalisable if it works equally well in more than one setting (such as two or more different hospitals). If an algorithm fails to generalise (i.e. fails to work in any setting other than the one it was developed in), overfitting is a likely cause.

Overfitting occurs when an algorithm becomes overly familiar with the data it is shown during training and memorises it, including irrelevant details, instead of learning only the patterns that matter. This over-familiarity means that when the algorithm is exposed to new data (e.g., new patients in a new hospital), it can fail if the new data does not contain the same details, including those irrelevant ones.
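
To make the idea of ‘memorising’ concrete, the sketch below compares two models on invented one-dimensional data in which the true relationship is y = x and each training point carries a fixed +1/-1 error. The ‘memoriser’ is a polynomial that passes exactly through every training point (Lagrange interpolation), so it reproduces the noise as well as the signal; the straight line cannot. The data, function names and choice of models are illustrative assumptions, not a description of any clinical algorithm.

```python
# Toy data, invented for illustration: the true relationship is y = x, and
# each training label carries a fixed +1/-1 "noise" offset.
train_x = list(range(10))
train_y = [x + (1 if x % 2 == 0 else -1) for x in train_x]

# "New" data: points lying between the training points, with no noise.
test_x = [x + 0.5 for x in range(9)]
test_y = list(test_x)


def memoriser(x):
    """Evaluate the polynomial that passes exactly through every training point
    (Lagrange interpolation): zero training error, noise included."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(train_x, train_y)):
        term = float(yi)
        for j, xj in enumerate(train_x):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def fit_line(xs, ys):
    """Ordinary least-squares straight line: a much less flexible model."""
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return lambda x: slope * x + intercept


def mse(model, xs, ys):
    """Mean squared error of `model` on the points (xs, ys)."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


line = fit_line(train_x, train_y)
for name, model in [("memoriser", memoriser), ("straight line", line)]:
    print(f"{name}: training MSE {mse(model, train_x, train_y):.3f}, "
          f"new-data MSE {mse(model, test_x, test_y):.3f}")
```

The memoriser scores perfectly on the training points, but its predictions swing wildly between them, so its error on the new points is far larger than the straight line's: the same ‘good on familiar data, poor on new data’ pattern described above.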

A famous example of this is an algorithm trained to tell the difference between huskies and wolves. During training, the algorithm could always correctly identify the animal it was shown. However, once it was given a new dataset, it often got the answer wrong.

When the researchers investigated why this had happened, they realised that all the images of wolves the algorithm had been shown during training featured snow in the background, and the algorithm had learned to rely on the snow rather than on the animal. So, when it was shown images of wolves on grass, it did not recognise them as wolves: in effect, it had learned that an animal was only a wolf if it was standing in snow.

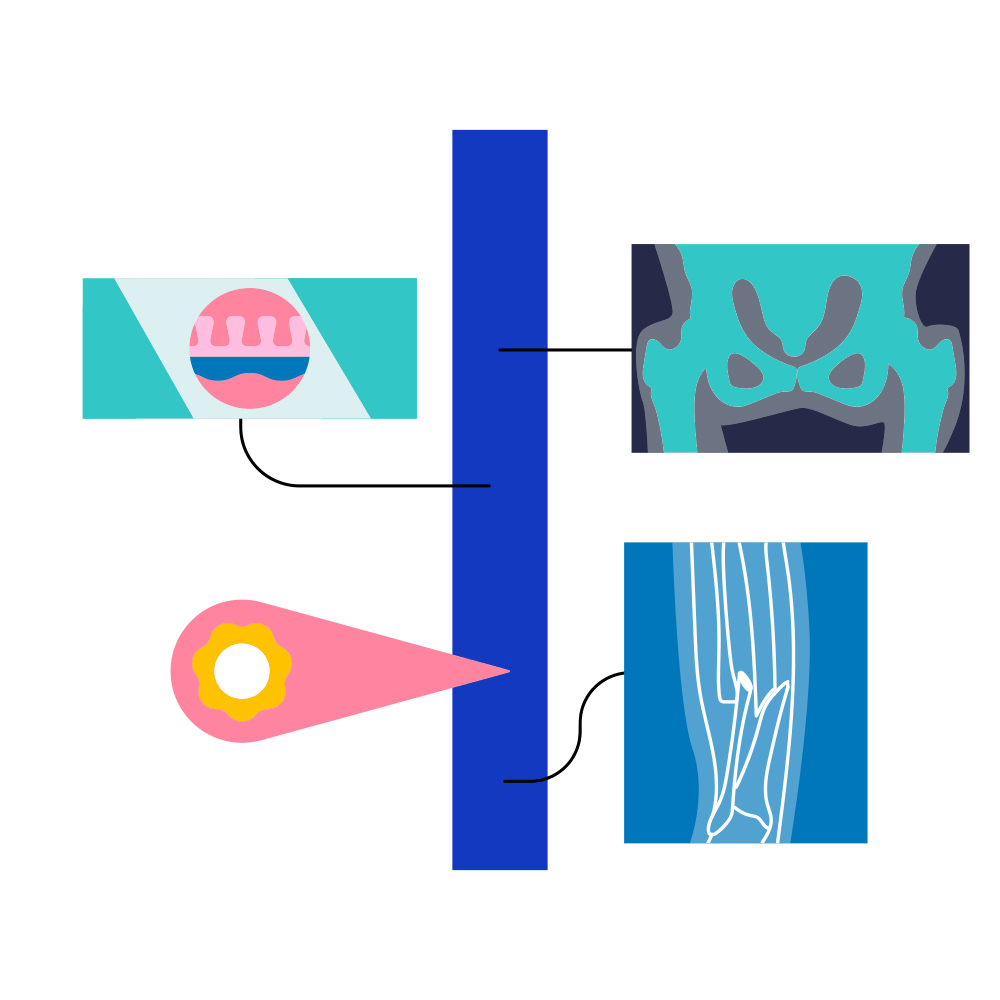
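
The huskies-vs-wolves failure can be reproduced in miniature. The sketch assumes each photo has already been reduced to two yes/no features, `snow_in_background` and `wolf_like_face` (hypothetical names and invented data), and uses a deliberately simple learner that keeps whichever single feature best predicts the label on the training set.

```python
# Hypothetical training photos: every wolf happens to be standing in snow.
training_set = [
    ({"snow_in_background": True, "wolf_like_face": True}, "wolf"),
    ({"snow_in_background": True, "wolf_like_face": True}, "wolf"),
    ({"snow_in_background": True, "wolf_like_face": False}, "wolf"),
    ({"snow_in_background": False, "wolf_like_face": False}, "husky"),
    ({"snow_in_background": False, "wolf_like_face": False}, "husky"),
    ({"snow_in_background": False, "wolf_like_face": True}, "husky"),
]

# Hypothetical new photos: wolves on grass, huskies in snow.
new_set = [
    ({"snow_in_background": False, "wolf_like_face": True}, "wolf"),
    ({"snow_in_background": False, "wolf_like_face": True}, "wolf"),
    ({"snow_in_background": True, "wolf_like_face": False}, "husky"),
    ({"snow_in_background": True, "wolf_like_face": False}, "husky"),
]


def predict(feature, image):
    """Say 'wolf' whenever the chosen feature is present."""
    return "wolf" if image[feature] else "husky"


def accuracy(feature, examples):
    """Fraction of examples classified correctly using only `feature`."""
    return sum(predict(feature, image) == label for image, label in examples) / len(examples)


def train_single_feature_classifier(examples):
    """Keep the one feature with the highest accuracy on the training set."""
    features = examples[0][0].keys()
    return max(features, key=lambda feature: accuracy(feature, examples))


chosen = train_single_feature_classifier(training_set)
print("feature learned:", chosen)
print("training accuracy:", accuracy(chosen, training_set))
print("new-data accuracy:", accuracy(chosen, new_set))
```

Because snow separates the training photos perfectly, the learner picks it over the genuinely relevant but imperfect `wolf_like_face` feature, and then gets every new photo wrong. Removing `snow_in_background` from the candidate features (feature selection) would leave `wolf_like_face`, which classifies all the new photos correctly.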

In healthcare, a similar thing might happen if an algorithm trained to recognise breast cancer only ever sees scans from one particular model of scanner during training. It might ‘learn’ that breast cancer = scans taken by that model. When the algorithm is then used in a different hospital with a different scanner, it may no longer recognise breast cancer, because details it relied on (e.g., image characteristics produced by that scanner model, such as colour pattern or image resolution) are missing.

More precisely, ‘overfitting’ is when an algorithm ‘fits’ so closely to its training data that it begins to learn noise and irrelevant details that may not be present in new data.

If an algorithm is ‘overfit’, it may perform well when tested in ‘the lab’ but underperform in ‘the clinic’, where it is presented with new real-world data that may differ from the data used during training. If an algorithm was trained on imaging data derived solely from one model of CT scanner, for example, and then deployed in a hospital using a different model of CT scanner, its performance is likely to drop significantly. Unless such issues are identified, they can pose serious threats to patient safety.
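
One practical way to identify the ‘lab versus clinic’ gap is to report performance separately for each test site or scanner rather than as a single pooled figure. A minimal sketch, assuming hypothetical site names, invented predictions and an arbitrary tolerance of 0.05 for what counts as a worrying drop:

```python
from collections import defaultdict


def accuracy_by_site(records):
    """records: iterable of (site, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for site, truth, prediction in records:
        total[site] += 1
        correct[site] += int(truth == prediction)
    return {site: correct[site] / total[site] for site in total}


def sites_with_performance_drop(per_site, reference_site, tolerance=0.05):
    """Return the sites whose accuracy falls more than `tolerance` below the reference."""
    reference = per_site[reference_site]
    return sorted(
        site for site, acc in per_site.items()
        if site != reference_site and reference - acc > tolerance
    )


# Invented results: (site, true label, predicted label), where 1 = cancer present.
records = [
    ("lab_test_set", 1, 1), ("lab_test_set", 0, 0),
    ("lab_test_set", 1, 1), ("lab_test_set", 0, 0),
    ("clinic_same_scanner", 1, 1), ("clinic_same_scanner", 0, 0),
    ("clinic_same_scanner", 1, 1), ("clinic_same_scanner", 0, 0),
    ("clinic_new_scanner", 1, 0), ("clinic_new_scanner", 0, 0),
    ("clinic_new_scanner", 1, 0), ("clinic_new_scanner", 0, 0),
]

per_site = accuracy_by_site(records)
print(per_site)
print("sites to investigate:", sites_with_performance_drop(per_site, "lab_test_set"))
```

Accuracy is used only to keep the sketch short; in a clinical evaluation, measures such as sensitivity and specificity would normally be reported for each site as well.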

Fortunately, there are several methods for mitigating the risk of overfitting:

- Increasing the size and diversity of the training dataset: either by aggregating more datasets, or via federated learning, in which a model is trained across data held at several sites without the data being pooled in one place.

- Data augmentation: adding modified copies of existing training examples (for example, rotated or flipped images) or random noise to the training dataset, so that the algorithm cannot rely on the exact details of individual examples.

- Feature selection: identifying the most important features within the training dataset and eliminating those that are irrelevant or redundant (for example, removing the snow in the wolves vs. huskies example).

- Regularisation: sometimes overfitting occurs because an algorithm becomes too complex. Regularisation adds a penalty for complexity during training (for example, penalising large model weights), which discourages the algorithm from fitting noise in the data. It is useful when feature selection is not possible (for example, when it’s not known in advance which features are the most relevant).
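
Regularisation can be illustrated with the simplest possible model, a straight line. The sketch assumes an L2 (‘ridge’) penalty, which is one common form of regularisation among several; `lam` is a hypothetical name for the penalty strength, and the data are invented.

```python
def fit_ridge_line(xs, ys, lam):
    """Fit y = w*x + b by minimising sum((y - w*x - b)**2) + lam * w**2.

    Only the slope w is penalised (the usual convention); lam = 0 gives
    ordinary least squares.
    """
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    w = sxy / (sxx + lam)  # the penalty enlarges the denominator, shrinking w
    b = y_mean - w * x_mean
    return w, b


# Invented noisy measurements.
xs = [0, 1, 2, 3, 4]
ys = [0.3, 0.8, 2.4, 2.7, 4.1]
for lam in (0, 1, 10, 100):
    w, b = fit_ridge_line(xs, ys, lam)
    print(f"lam={lam:>3}: slope {w:.3f}, intercept {b:.3f}")
```

As `lam` grows, the fitted slope is pulled towards zero, so the model can bend less to fit noise in individual training points. In practice the penalty strength is usually tuned on held-out validation data rather than fixed in advance.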

These methods can improve the generalisability of a model upfront. However, generalisability can still degrade over time (for example, because of dataset drift, where the data seen in practice gradually changes away from the training data). This is why it is important to continuously monitor the performance of an algorithm after it has been deployed, so that generalisability problems can be detected and dealt with.
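
Post-deployment monitoring can start as simply as tracking accuracy over the most recent cases and raising an alert when it falls below an agreed level. The sketch assumes that a confirmed outcome eventually becomes available for each prediction; the class name, window size and threshold are hypothetical.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent `window` cases and flag drops."""

    def __init__(self, window=100, alert_below=0.9):
        # A deque with maxlen silently discards the oldest result when full.
        self.results = deque(maxlen=window)
        self.alert_below = alert_below

    def record(self, truth, prediction):
        self.results.append(truth == prediction)

    def accuracy(self):
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def should_alert(self):
        # Only alert once the window is full, to avoid noisy early estimates.
        if len(self.results) < self.results.maxlen:
            return False
        return self.accuracy() < self.alert_below


monitor = RollingAccuracyMonitor(window=10, alert_below=0.8)
for _ in range(10):
    monitor.record(1, 1)      # ten correct predictions
print(monitor.accuracy(), monitor.should_alert())
for _ in range(5):
    monitor.record(1, 0)      # five missed cases push out older results
print(monitor.accuracy(), monitor.should_alert())
```

In this example the alert fires once half of the last ten cases are wrong. Waiting for a full window before alerting avoids reacting to the first few cases, at the cost of a slower response.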

An Owkin example

Further reading

- Challen, Robert et al. 2019. ‘Artificial Intelligence, Bias and Clinical Safety’. BMJ Quality & Safety 28(3): 231–37.

- Farah, Line et al. 2023. ‘Assessment of Performance, Interpretability, and Explainability in Artificial Intelligence–Based Health Technologies: What Healthcare Stakeholders Need to Know’. Mayo Clinic Proceedings: Digital Health 1(2): 120–38.

- Futoma, Joseph et al. 2020. ‘The Myth of Generalisability in Clinical Research and Machine Learning in Health Care’. The Lancet Digital Health 2(9): e489–92.

- Kocak, Burak, Ece Ates Kus, and Ozgur Kilickesmez. 2021. ‘How to Read and Review Papers on Machine Learning and Artificial Intelligence in Radiology: A Survival Guide to Key Methodological Concepts’. European Radiology 31(4): 1819–30.

- Wan, Bohua, Brian Caffo, and S. Swaroop Vedula. 2022. ‘A Unified Framework on Generalizability of Clinical Prediction Models’. Frontiers in Artificial Intelligence 5: 872720.