White box vs black box

When doctors promise to ‘do no harm,’ they become legally bound to act in the best interest of their patients and not to abuse the trust that has been placed in them. To uphold this promise, doctors are required to explain any decisions they make – for example, what diagnosis they are giving or what treatment plan they are recommending to their patients. This is so that patients can understand what is happening to them and can give their informed consent.

White box

If an AI algorithm is being used as part of the decision-making process, for example to help diagnose patients, it is still important that the doctor can explain the ‘decision’ (e.g., the classification or prediction) made by that algorithm. Some types of algorithms are ‘naturally’ explainable or easy to understand. Decision tree algorithms, for example, follow a logical ‘if this, then that’ pattern.

For instance, if a patient has a cough, a high temperature, a sore throat, and has lost their sense of smell, the algorithm may ‘diagnose’ the patient as having COVID-19. These types of algorithms are known as ‘white box’ algorithms because it is easy to ‘see’ into them.
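The ‘if this, then that’ pattern can be sketched in a few lines of Python. This is a toy illustration only: the function name `diagnose`, the symptom labels, and the rule itself are assumptions made for the example, not clinical guidance or a real diagnostic model.

```python
def diagnose(symptoms: set[str]) -> tuple[str, list[str]]:
    """Toy 'if this, then that' classifier that also reports each check it made.

    The symptom names and the rule are illustrative assumptions only.
    """
    required = ["cough", "high_temperature", "sore_throat", "loss_of_smell"]
    trace = []
    for symptom in required:
        present = symptom in symptoms
        trace.append(f"{symptom}: {'yes' if present else 'no'}")
        if not present:
            # The first missing symptom ends the chain of checks.
            return "no COVID-19 flag", trace
    return "possible COVID-19", trace


label, trace = diagnose({"cough", "high_temperature", "sore_throat", "loss_of_smell"})
print(label)
for step in trace:
    print(" ", step)
```

Because every check is written out and recorded in `trace`, a doctor could read back exactly why the label was given, which is what makes this kind of model ‘white box.’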

Black box

Other types of algorithms are much harder to understand or explain. Neural networks, for example, have ‘hidden’ layers that perform calculations or follow rules that are unknown or unexplained. For instance, if an algorithm is given a patient’s genetic data, electronic health record data, imaging data, and data about their lifestyle, and uses all of this to decide whether that patient is at risk of developing a specific type of cancer in the future, it might not be possible to know exactly what rules it followed to reach a decision. These types of algorithms are known as ‘black box’ algorithms because it is difficult to see into them.
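To give a feel for why hidden layers are hard to read, here is a minimal sketch of a tiny neural network in plain Python. All of the weights, the three-input layout, and the name `predict_risk` are made up for illustration; a real clinical model would have far more parameters, learned from data.

```python
import math

# Made-up weights for a tiny network: 3 inputs -> 2 hidden units -> 1 output.
W_HIDDEN = [[0.8, -1.2, 0.5], [-0.3, 0.9, 1.1]]
B_HIDDEN = [0.1, -0.4]
W_OUT = [1.5, -2.0]
B_OUT = 0.2


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_risk(features: list[float]) -> float:
    """Return a score between 0 and 1 from nested weighted sums."""
    hidden = [
        sigmoid(sum(w * x for w, x in zip(row, features)) + b)
        for row, b in zip(W_HIDDEN, B_HIDDEN)
    ]
    return sigmoid(sum(w * h for w, h in zip(W_OUT, hidden)) + B_OUT)


print(predict_risk([1.0, 0.0, 1.0]))
```

Even in this small case, the output is a blend of every input passed through several weighted sums, so there is no single readable rule to point to. With thousands or millions of weights, inspecting the code says very little about why a particular patient received a particular score.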

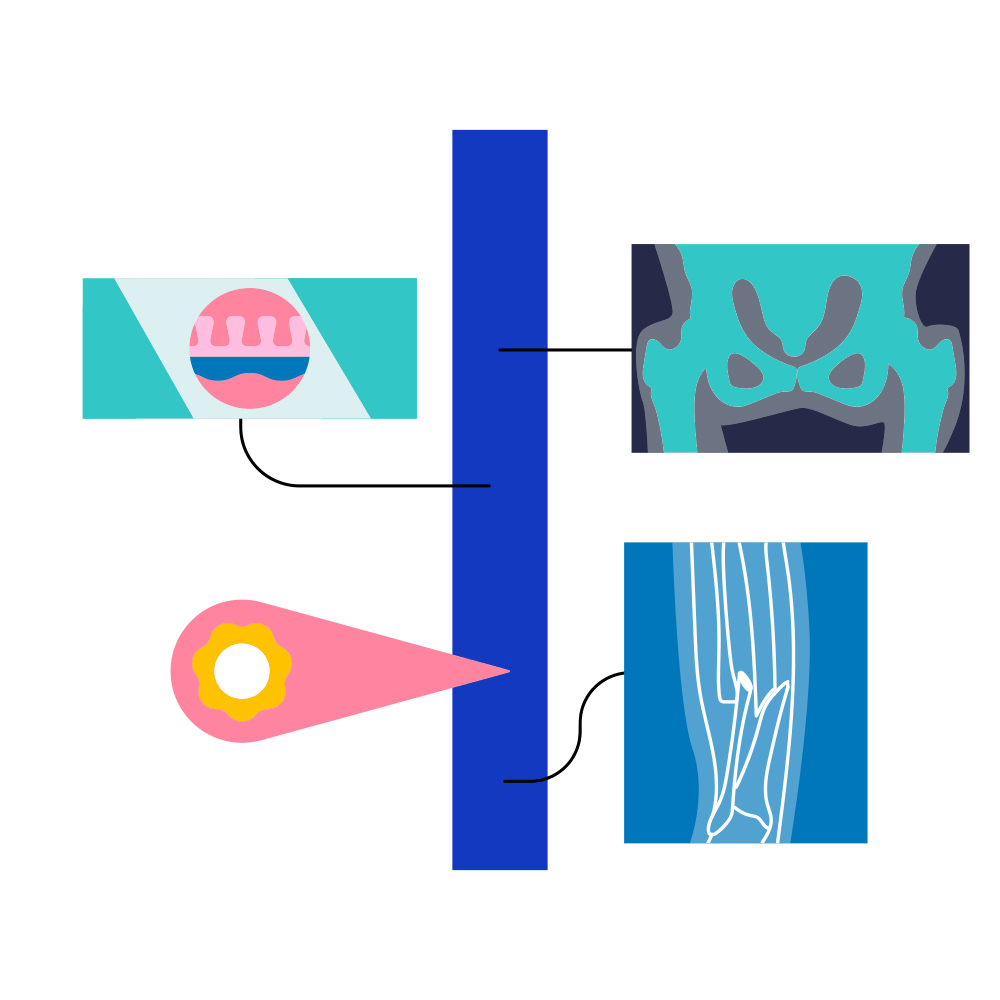

As it’s important for doctors to be able to explain how algorithms make decisions, it might seem that ‘black box’ algorithms should not be used. However, black box algorithms are sometimes more accurate (i.e., better at the job) than white box algorithms. When this is the case, a black box algorithm can be used and made ‘explainable’ after it has been built, using techniques from the XAI (or explainable AI) community. These techniques might, for example, highlight which pixels of an image an algorithm is using to make its ‘diagnosis.’
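One simple way to highlight influential pixels is occlusion: hide one pixel at a time and record how much the model’s score changes. The sketch below assumes a stand-in `toy_model` that only ‘looks at’ the top-left 2x2 corner of the image; both function names and the model itself are hypothetical, chosen only to make the example self-contained.

```python
def toy_model(image: list[list[float]]) -> float:
    """Stand-in for a black-box classifier that scores a 2D image.

    Hypothetical behaviour: it only uses the 2x2 block in the top-left corner.
    """
    return sum(image[r][c] for r in range(2) for c in range(2))


def occlusion_map(model, image, fill=0.0):
    """Score drop caused by hiding each pixel in turn (larger = more important)."""
    baseline = model(image)
    heat = []
    for r, row in enumerate(image):
        heat_row = []
        for c, _ in enumerate(row):
            occluded = [list(rw) for rw in image]  # copy so the input is untouched
            occluded[r][c] = fill
            heat_row.append(baseline - model(occluded))
        heat.append(heat_row)
    return heat


image = [[1.0] * 4 for _ in range(4)]
for heat_row in occlusion_map(toy_model, image):
    print(heat_row)
```

The resulting map is non-zero only where the model actually depends on the input, without needing to open up the model itself. Practical XAI tools use more refined versions of this idea, but the principle of probing the model from the outside is the same.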

If an algorithm is being used as part of the decision-making process – for example, if it is helping to diagnose the patient – it is still important that the doctor can explain the ‘decision’ (e.g., the classification or prediction) made by that algorithm. In other words, it’s important that all AI algorithms used in healthcare are ‘explainable.’ However, not all algorithms are equally ‘explainable’.

White box algorithms are ‘transparent’ or ‘explainable’ by design. These are typically models based on patterns, rules, or decision trees that are written in easily interpretable language and can be understood by data scientists or – with training – medical professionals without any modification. Specific examples of white box models include: Generalised Additive Models (GAM), Generalised Additive Models with Pairwise Interactions (GA2M), Bayesian Rule Lists, and case-based reasoning algorithms. Whilst these models have a distinct advantage from a ‘natural’ transparency or auditability perspective, they can sometimes struggle to match the predictive accuracy of more complex black box algorithms, particularly when the relationship between variables involved in the prediction is non-linear or conditional.
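The transparency of an additive model comes from its structure: the prediction is an intercept plus one separate contribution per feature, so each contribution can be inspected on its own. The sketch below illustrates that structure with hand-written shape functions; the feature names, coefficients, and the `explain` helper are all hypothetical and are not taken from any fitted or clinical model.

```python
import math

# Hypothetical shape functions: one small, readable function per feature.
SHAPE_FUNCTIONS = {
    "age": lambda years: 0.03 * (years - 50),
    "bmi": lambda bmi: 0.002 * (bmi - 25) ** 2,
    "smoker": lambda flag: 0.9 if flag else 0.0,
}
INTERCEPT = -2.0  # made-up baseline log-odds


def explain(patient: dict) -> tuple[float, dict]:
    """Return the predicted risk and each feature's additive contribution."""
    contributions = {
        name: shape(patient[name]) for name, shape in SHAPE_FUNCTIONS.items()
    }
    log_odds = INTERCEPT + sum(contributions.values())
    risk = 1.0 / (1.0 + math.exp(-log_odds))
    return risk, contributions


risk, contributions = explain({"age": 62, "bmi": 31, "smoker": True})
print(f"risk = {risk:.3f}")
for name, value in contributions.items():
    print(f"  {name}: {value:+.3f}")
```

A model with pairwise interactions would add further terms that depend on two features at once, which stays readable as long as the number of such terms is small.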

Black box algorithms are models containing complex mathematical or statistical calculations, involving non-linear or conditional relationships, that cannot be explained or understood simply by inspecting the model code. These models include artificial neural networks, convolutional neural networks, support vector machines, and many others. Although the inscrutability of these models can be problematic, they often produce more ‘accurate’ predictions. This difficult trade-off between explainability and accuracy has given rise to the field of XAI, or eXplainable AI, which focuses on developing techniques that can be applied to black box algorithms after they have been designed, in order to provide a post-hoc explanation of the algorithm’s decision-making process.

An Owkin example

Mesonet is a ‘white box’ model developed by Owkin that can be fairly easy to explain. It uses deep neural networks to split images into many small tiles and group them into clusters with similar morphology. It then identifies regions in the stroma that are predictive of mesothelioma prognosis, which can be used to define new subtypes and help guide patient treatment. Mesonet predicts, with 94% accuracy, transitional mesotheliomas that are challenging for pathologists to identify.

Further reading

- Loyola-Gonzalez, Octavio. 2019. ‘Black-Box vs. White-Box: Understanding Their Advantages and Weaknesses From a Practical Point of View’. IEEE Access 7: 154096–113.

- Panigutti, Cecilia et al. 2021. ‘FairLens: Auditing Black-Box Clinical Decision Support Systems’. Information Processing & Management 58(5): 102657.